Taejong Joo

I am a final-year PhD student in the Department of Industrial Engineering & Management Sciences at Northwestern University, where I am fortunate to work with Diego Klabjan.

My aspiration is to develop AI systems with human-level adaptability and computational properties aligned with human preferences. Such AI systems would enable effective collaboration between humans and machines to tackle evolving real-world challenges that neither could solve alone. With this ambition, I’m currently working on post-training large language models, model adaptation, and robust machine learning.

Previously, I obtained my bachelor’s and master’s degrees at Hanyang University, where I worked on human-machine interactions. My research on formalizing human-machine interactions to enable adaptive automation under safety constraints was featured among the top 50 most popular articles in IEEE Transactions on Human-Machine Systems.

Email: taejong.joo [at] northwestern.edu

Industrial Experience

2025.03~2025.06 / 2025.09~2025.10

Student Researcher

Optimization algorithm for training large language models.

2025.06~2025.09

Research Intern

Multi-agent system for long context modeling.

2018.01~2021.05

Deep Learning Researcher (Senior from 2021.01)

Offline reinforcement learning for financial portfolio optimization, scalable variational inference for uncertainty estimation, distribution shifts, and model compression.

Selected Research

For the full list of my publications, visit my Google Scholar.

Preprint, 2025

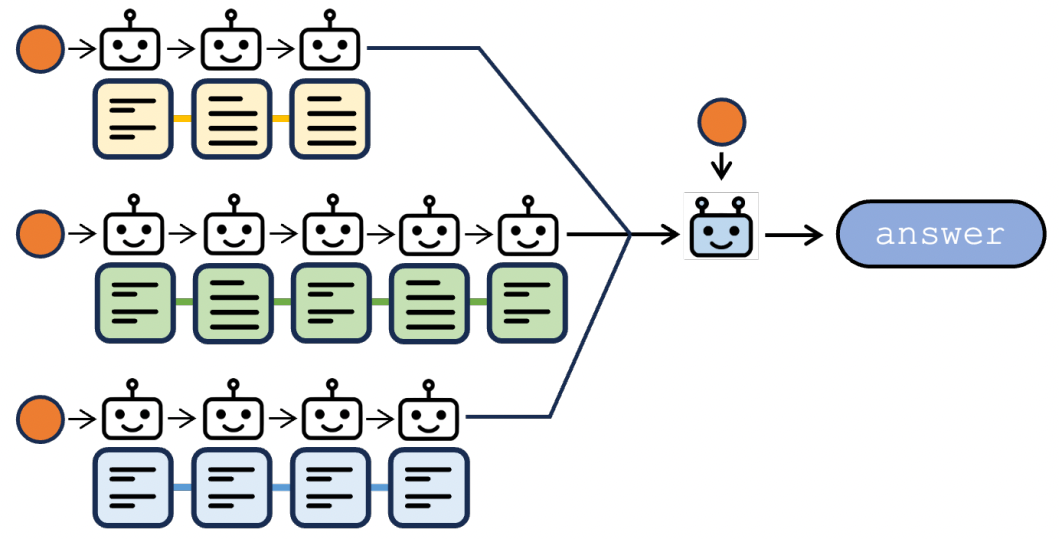

We develop Graph of Agents (GoA), a multi-agent system that expands the context window of large language models by orders of magnitude without any additional training. By framing long context modeling as a compression problem, GoA dynamically builds an optimal multi-agent collaboration structure tailored to the input. This principled approach eliminates the need for complex prompt engineering and specialized multi-agent system designs.

Neural Information Processing Systems (NeurIPS), 2025

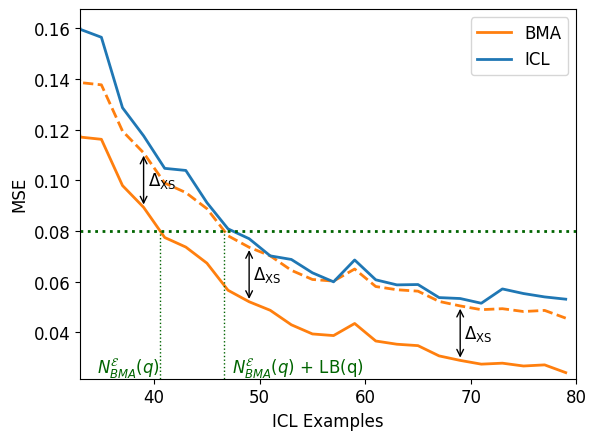

We demonstrate that the convenience of in-context learning (ICL), which enables adaptation to a new task by conditioning on demonstrations in an input prompt, comes with a hidden cost. While ICL matches the efficiency of a Bayes-optimal estimator in few-shot settings, we prove that its statistical efficiency fundamentally diminishes over long contexts. This reveals a critical trade-off between ICL's flexibility and its long-term statistical efficiency.

Neural Information Processing Systems (NeurIPS), 2024

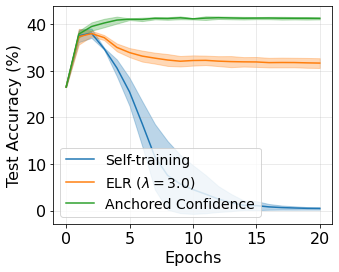

We prove that selectively promoting temporal consistency for confident predictions significantly enhances self-training performance under distribution shifts. This approach prevents the common issue of model collapse, where performance deteriorates after a few epochs of self-training, resulting in improved performance with attractive robustness properties.

International Conference on Machine Learning (ICML), 2024

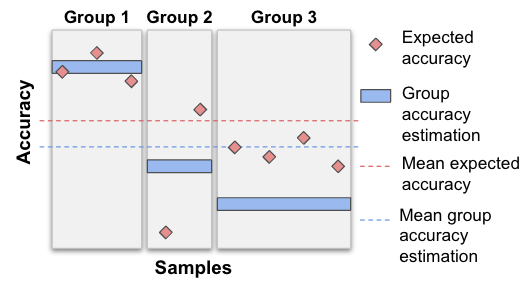

We introduce a new approach for simultaneously addressing model calibration and model selection in unsupervised domain adaptation: estimating the average accuracy across subpopulations. For efficient and accurate subpopulation accuracy estimation, we reformulate the high-dimensional importance weight estimation problem as a more tractable coordinate-wise convex optimization problem.

International Conference on Machine Learning (ICML), 2020

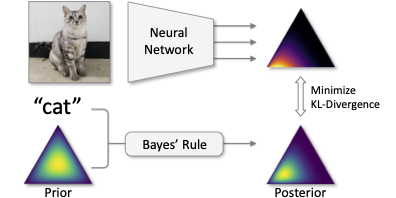

We propose a scalable variational inference framework using a last-layer Dirichlet model as a new alternative to Bayesian neural networks. Our approach significantly enhances the uncertainty representation ability of deterministic neural networks while preserving their strong generalization performance and efficiency, unlike Monte Carlo dropout and deep ensembles.

Misc.

Guided by first principles and the elegance of Occam’s razor, I believe simplicity often reveals the deepest insights and leads to effective and versatile solutions (with far fewer headaches).

Outside of work, I enjoy experimenting in the kitchen as a self-proclaimed master chef (enthusiastically endorsed by my wife), playing tennis, splashing paint on canvas, and traveling.

Fun fact: My Erdős Number = 3: Taejong Joo -> Diego Klabjan -> Craig Tovey -> Paul Erdős.